Citation

Please consider citing us if you find our dataset or model useful.

@inproceedings{pan2025locate,
  title={Locate anything on earth: Advancing open-vocabulary object detection for remote sensing community},
  author={Pan, Jiancheng and Liu, Yanxing and Fu, Yuqian and Ma, Muyuan and Li, Jiahao and Paudel, Danda Pani and Van Gool, Luc and Huang, Xiaomeng},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={39},
  number={6},
  pages={6281--6289},
  year={2025}
}

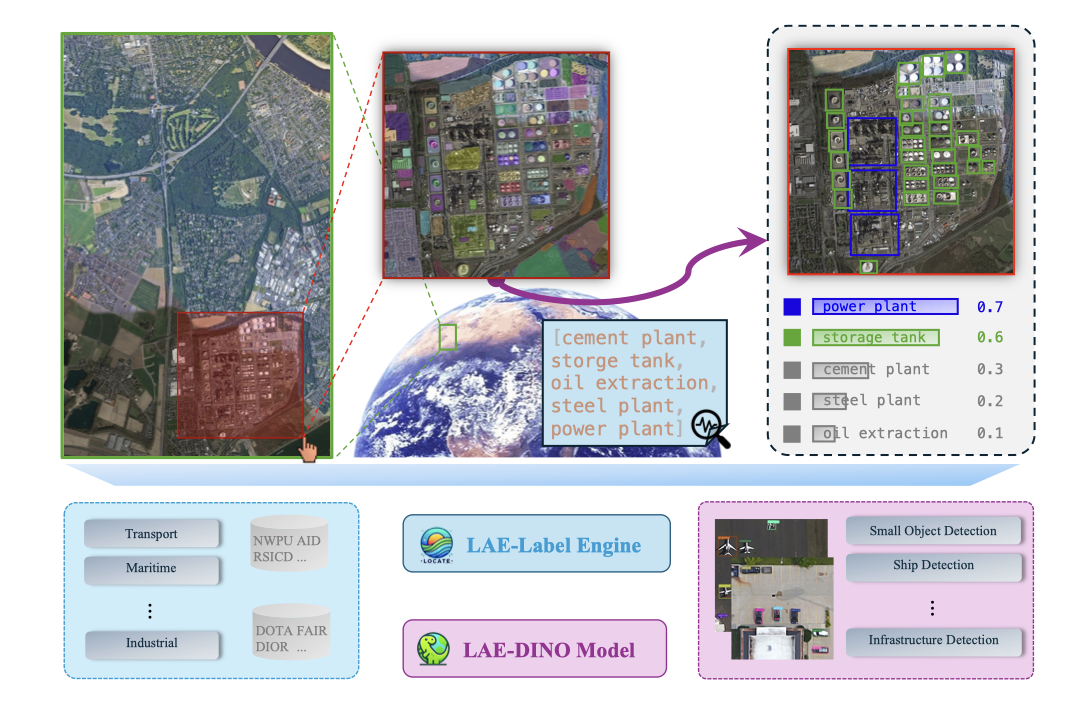

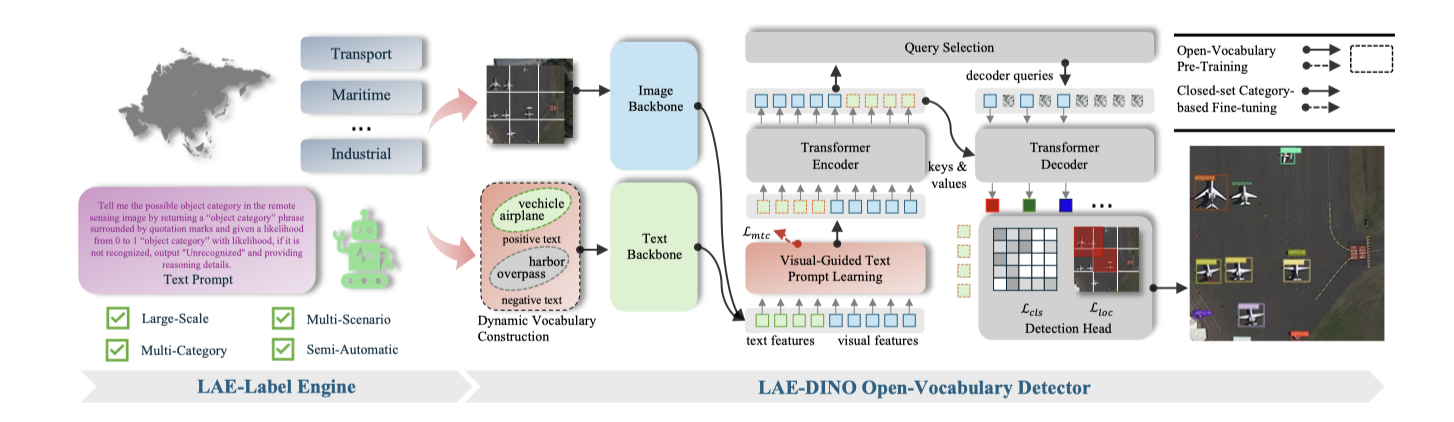

Locate Anything on Earth: Advancing Open-Vocabulary Object Detection for Remote Sensing Community
